Inter-thread communication: Because the threads of a process share the same address space and data, one thread's data can be used directly by the other threads without going through the operating system.

For this reason, threads mainly communicate and synchronize through locks, signals, and semaphores. Communication between processes is different: each process has its own independent data space, so inter-process communication is more complicated and needs help from the operating system.
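To make the thread case concrete, here is a minimal sketch in Python (chosen only for illustration; the source names no language). The function name `count_with_threads` and its parameters are hypothetical. Several threads update one ordinary object directly, and a lock keeps the updates from interleaving.

```python
import threading

def count_with_threads(n_threads=4, increments=10_000):
    """Let several threads update one shared counter under a lock."""
    state = {"counter": 0}      # ordinary object, visible to every thread
    lock = threading.Lock()     # protects the read-modify-write below

    def worker():
        for _ in range(increments):
            with lock:          # only one thread updates at a time
                state["counter"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]
```

No data is copied or handed to the operating system here; the threads simply see the same object, which is why synchronization rather than transport is the main concern.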

The main inter-process communication mechanisms are pipes, named pipes (FIFOs), message queues, semaphores, shared memory, signals, and sockets.

The following briefly introduces several communication methods between processes:

Pipe: A pipe transfers data in one direction only, from one party to the other, which makes it a half-duplex communication method. It can only be used between related processes, that is, between a parent and its child or between sibling processes. A pipe has no name and its size is limited. The data it carries is an unformatted byte stream, so the two processes must agree on the data format beforehand.

A pipe behaves like a special file, but this file exists only in memory. When the pipe is created, the system allocates a page as its data buffer, and the processes communicate by reading and writing that buffer. One process can only write and the other can only read, which is why the communication is called half-duplex. Why can each side only read or only write? Because the writing process appends at the tail of the buffer while the reading process consumes from the head; the two ends use different data structures and therefore have different functions.
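The parent-child arrangement described above can be sketched as follows. This is an illustrative example assuming a Unix-like system where `os.fork` is available; the function name `read_from_child` is hypothetical. The child keeps only the write end and the parent keeps only the read end.

```python
import os

def read_from_child(message: bytes) -> bytes:
    """Fork a child that writes `message` into a pipe; the parent reads it."""
    read_fd, write_fd = os.pipe()       # one-way channel: write_fd -> read_fd
    pid = os.fork()
    if pid == 0:
        # Child: writer only, so it closes the read end.
        try:
            os.close(read_fd)
            with os.fdopen(write_fd, "wb") as writer:
                writer.write(message)   # flushed and closed on exit from `with`
        finally:
            os._exit(0)                 # leave without running parent cleanup
    # Parent: reader only, so it closes the write end.
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as reader:
        data = reader.read()            # returns at EOF, once all write ends close
    os.waitpid(pid, 0)                  # reap the child
    return data
```

The pipe delivers raw bytes with no message boundaries, so any structure (length prefixes, delimiters, encodings) has to be agreed on by both sides.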

Named pipe: As the name suggests, the difference from an ordinary pipe is that it has a name. Because of that name, it is not restricted to related processes the way an ordinary pipe is.

A named pipe has a path name associated with it, and the communicating processes still define their own transmission format. Another difference from an ordinary pipe is that a named pipe is a special file stored in the file system, so unrelated processes can open it, but data must still be read in first-in, first-out order. It is also half-duplex.
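As a rough illustration of access by path name, the sketch below creates a FIFO in a temporary directory and lets a separately started Python interpreter write to it. It assumes a Unix-like system with `os.mkfifo`; the names `fifo_roundtrip`, `WRITER_CODE`, and `demo_fifo` are hypothetical. The writer inherits no descriptor from the reader and finds the pipe only through its path.

```python
import os
import subprocess
import sys
import tempfile

# Program run by the separate writer process: open the FIFO by path and write.
WRITER_CODE = """
import sys
with open(sys.argv[1], "wb") as fifo:   # blocks until a reader opens the FIFO
    fifo.write(sys.argv[2].encode())
"""

def fifo_roundtrip(text: str) -> str:
    """Create a FIFO, have another process write `text` to it, and read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo_fifo")
        os.mkfifo(path)                 # special file visible in the file system
        writer = subprocess.Popen([sys.executable, "-c", WRITER_CODE, path, text])
        with open(path, "rb") as fifo:  # blocks until the writer opens its end
            data = fifo.read()          # bytes arrive in first-in, first-out order
        writer.wait()
        return data.decode()
```

Opening a FIFO blocks until the other end is opened as well, so a reader with no writer would wait indefinitely; a production version would want a timeout or non-blocking open.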

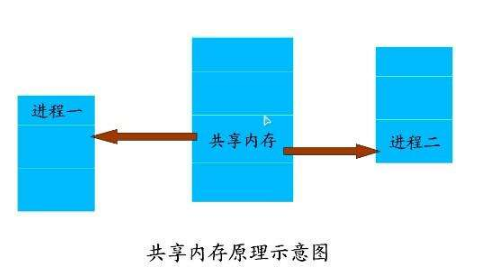

Shared memory: A region of memory is allocated that other processes can also access. Shared memory is arguably the most useful method of inter-process communication and the fastest form of IPC. Before a shared memory region can be used, it must be attached or mapped into the process address space through a system call. When two processes A and B share memory, the same piece of physical memory is mapped into the address space of each. Process A immediately sees process B's updates to the data in shared memory, and vice versa.

Because multiple processes share the same memory region, some synchronization mechanism is required; a mutex or a semaphore will do. An obvious benefit of shared memory is high efficiency, because processes read and write the memory directly without copying data between them. With pipes or message queues, data is copied four times between kernel and user space, whereas shared memory needs only two copies [1]: one from the input file into the shared memory region, and one from the shared memory region to the output file. In practice, processes do not unmap the region after every small read or write and then re-establish it for the next exchange. Instead, the shared region is kept until communication is complete, so the data stays in shared memory and is not written back to the file in the meantime. The contents are usually written back to the file only when the region is unmapped. This is why communication through shared memory is so efficient.
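A small sketch of the mapping idea, assuming a Unix-like system: an anonymous `mmap` region created before `os.fork` is shared between parent and child, so a value the child stores is visible to the parent without any pipe or message. The function name `sum_in_child` is hypothetical, and waiting for the child to exit stands in for the mutex or semaphore a real program would use.

```python
import mmap
import os
import struct

def sum_in_child(values):
    """Child stores sum(values) in a shared mapping; the parent reads it back."""
    shared = mmap.mmap(-1, 8)           # anonymous mapping, shared across fork on Unix
    pid = os.fork()
    if pid == 0:
        try:
            # Child: write a signed 64-bit integer straight into shared memory.
            struct.pack_into("q", shared, 0, sum(values))
        finally:
            os._exit(0)
    os.waitpid(pid, 0)                  # crude synchronization: wait for the child
    (result,) = struct.unpack_from("q", shared, 0)
    shared.close()                      # unmap once communication is finished
    return result
```

The mapping is created once and kept for the whole exchange, which mirrors the point above that the region is not torn down and rebuilt for each small transfer.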

Signal: A signal is a software-level simulation of the interrupt mechanism. In principle, a process receiving a signal is much like a processor receiving an interrupt request.

Signals are asynchronous. A process does not have to perform any operation to wait for a signal, and it does not know in advance when one will arrive. Signals are the only asynchronous communication mechanism among the inter-process communication mechanisms; a signal can be regarded as an asynchronous notification that tells the receiving process what has happened. With the POSIX real-time extensions, the signal mechanism became more powerful: in addition to basic notification, a signal can carry additional information. Signal events come from two sources: hardware (for example, a key press or a hardware fault) and software. Signals are divided into reliable and unreliable signals, and into real-time and non-real-time signals. A process can respond to a signal in three ways: 1. ignore the signal; 2. catch the signal; 3. perform the default action.
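The "catch the signal" response can be sketched like this, assuming a Unix-like system and that the code runs in the main thread (Python only allows handlers to be installed there). `SIGUSR1` is chosen here purely as an example of a software-generated signal, and the function name `catch_sigusr1` is hypothetical.

```python
import os
import signal
import time

def catch_sigusr1(timeout=2.0):
    """Install a handler, send SIGUSR1 to this process, return what was caught."""
    received = []

    def handler(signum, frame):
        received.append(signum)         # runs asynchronously to the main flow

    previous = signal.signal(signal.SIGUSR1, handler)   # response 2: catch
    try:
        os.kill(os.getpid(), signal.SIGUSR1)            # software source
        deadline = time.monotonic() + timeout
        while not received and time.monotonic() < deadline:
            time.sleep(0.01)            # the process does not block on the signal
    finally:
        # Restore the earlier disposition (fall back to the default action).
        signal.signal(signal.SIGUSR1,
                      previous if previous is not None else signal.SIG_DFL)
    return received
```

Passing `signal.SIG_IGN` instead of a handler corresponds to ignoring the signal, and `signal.SIG_DFL` to performing the default action.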

Semaphore: A semaphore is essentially a counter, commonly used to synchronize processes or threads, especially their access to critical resources. A critical resource is one that only one process or thread may operate on at a given time. When the value of the semaphore is greater than or equal to 0, it is the number of units of the critical resource available to concurrent processes. When it is less than 0, its absolute value is the number of processes waiting for the resource. More importantly, the value of a semaphore can only be changed by the P (wait) and V (signal) operations.
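The counting behavior can be illustrated with threads, where `acquire` plays the role of P and `release` the role of V. This is a sketch with hypothetical names (`max_concurrent_users`, `n_resources`); note that Python's `threading.Semaphore` never exposes a negative count, so waiting callers are blocked internally rather than shown as a value below 0.

```python
import threading
import time

def max_concurrent_users(n_workers=6, n_resources=2):
    """Return the largest number of workers that held the resource at once."""
    semaphore = threading.Semaphore(n_resources)    # counter starts at n_resources
    guard = threading.Lock()                        # protects the bookkeeping only
    active = 0
    peak = 0

    def worker():
        nonlocal active, peak
        semaphore.acquire()             # P: take one unit, block if none is left
        try:
            with guard:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)            # pretend to use the resource
            with guard:
                active -= 1
        finally:
            semaphore.release()         # V: give the unit back, wake one waiter

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak
```

With `n_resources=1` the semaphore degenerates into a mutex, which matches the critical-resource case where only one process or thread may operate at a time.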
