Lei Feng Network note: This article was translated by an engineer at Tupu Technology from "Google's Artificial Brain Is Pumping Out Trippy—And Pricey—Art". It is an exclusive Lei Feng Network article.

Last Friday night, Blaise Agüera y Arcas, a computer graphics expert at Google, gave a talk to about 800 geeks at an art gallery housed in an old movie theater in San Francisco's Mission District.

During the talk, he stood beside a wall where a movie screen once hung and projected a double portrait painted some 500 years ago by the German Renaissance painter Hans Holbein. The painting contains a distorted human skull. According to Blaise, Holbein is unlikely to have drawn this strange skull freehand. Instead, he probably used a mirror or a set of lenses to project the image of the skull onto the canvas and then traced its outline. "He used the most advanced technology of his time," Blaise commented.

Blaise's point was that we have used science and technology to create art for centuries, and in that respect the present is not so different from the past. With this, he introduced the gallery's exhibits, each of them created by artificial neural networks. These networks, built from computer hardware and software, resemble the networks of neurons in the human brain. Last year, Google researchers developed a new way of using artificial neural networks to create art. This weekend, Google put the output of this artificial intelligence system on show in a two-day exhibition. The resulting images raised $84,000 for the Gray Area Foundation for the Arts, a nonprofit organization that combines art and technology.

The evening had a stylish but very geeky Silicon Valley feel. "Look! That's Clay Bavor, head of Google's well-known virtual reality project!" "Look, there's Josh Constine from TechCrunch (a technology news site) over there." "Is that MG Siegler, who used to write for TechCrunch and now turns up at neural network art shows? Yes, I think that's him." At the same time, the evening showed how quickly artificial intelligence is developing. The technology has reached a historic high: artificial neural networks not only improve Google's search engine, they also produce artworks that people are willing to pay a lot of money for.

From Blaise's point of view, this is simply part of a process that has been under way since humanity made its first artwork. Like Hans Holbein's optics, neural networks merely broaden what artistic creation can be. For others, this is something excitingly new. "This is the first time I have thought of the process of creating a work of art as a science project," said Alexander Lloyd, a sponsor of the Gray Area Foundation for the Arts, who spent thousands of dollars on artworks created by an artificial neural network. Friday night's exhibition also offered a hopeful hint of the artificial intelligence world we are heading toward. Machines will become more intelligent and autonomous. They will do more work for us and lead us into a new world that exceeds even our imagination.

| Deep (Learning) Dreams

Today, at companies including Google, Facebook, and Twitter, artificial neural networks are widely used for automatic image recognition, speech recognition on smartphones, and translation between languages. If you feed the machine enough photos of your relatives, it will learn to recognize them. This is how Facebook recognizes faces in the photos you upload. Now, with a "generator" called "Deep Dream", Google has turned these neural networks on their head. The machine no longer recognizes pictures; it creates them.

Google calls this work "Inceptionism", a nod to the 2010 Leonardo DiCaprio film "Inception", which depicts a technology for implanting ideas in people's minds while they sleep. This may not be the best metaphor, but the new technology does show us something like a machine's dreams.

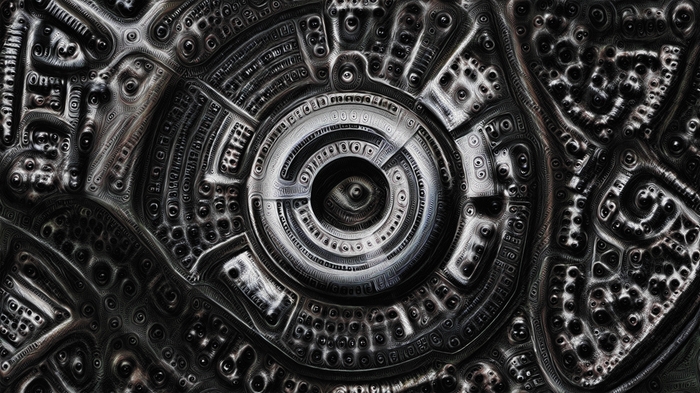

To set Deep Dream's "brain" to work, we first feed it a photo or some other image. The neural network looks for patterns it recognizes in the image, enhances them, and then repeats the process on the result. "This creates a feedback loop: if a cloud looks a bit like a bird, the neural network will make it look more like a bird," Google staff wrote in a blog post when the project was first announced. "This in turn makes the network recognize the bird even more strongly on the next pass, until a richly detailed bird suddenly appears before your eyes."
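The feedback loop described above can be sketched as gradient ascent on the image itself. This is only a toy illustration under loud assumptions, not Google's code: the "bird detector" is a hypothetical fixed weight pattern rather than a deep network, the "image" is a list of four numbers, and the names `activation`, `dream_step`, and `dream` are invented for this example.

```python
# Toy sketch of a Deep Dream style feedback loop (hypothetical, not Google's code).
# The "detector" is a fixed weight pattern; its activation is the dot
# product between that pattern and the image.

def activation(image, pattern):
    """Return how strongly the detector responds to the image."""
    return sum(p * x for p, x in zip(pattern, image))

def dream_step(image, pattern, step_size=0.1):
    """Take one gradient-ascent step on the image.

    For a dot-product detector, the gradient of the activation with
    respect to each pixel is simply the matching pattern weight.
    """
    return [x + step_size * p for x, p in zip(image, pattern)]

def dream(image, pattern, steps=5, step_size=0.1):
    """Repeat the step, recording the activation after each pass."""
    history = [activation(image, pattern)]
    for _ in range(steps):
        image = dream_step(image, pattern, step_size)
        history.append(activation(image, pattern))
    return image, history

bird_pattern = [1.0, -1.0, 1.0, -1.0]  # hypothetical detector weights
cloud = [0.2, 0.1, 0.3, 0.0]           # an image that is faintly bird-like

dreamed, history = dream(cloud, bird_pattern)
```

Each pass nudges the image toward whatever the detector already responds to, so the values in `history` rise step by step. In a real deep network the detector is nonlinear and the gradient changes on every pass; here it is constant, which keeps the sketch short but leaves out much of what makes the actual images so detailed.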

The output of this method is captivating, but also a little disturbing. If you feed the neural network a photo of yourself and it finds something dog-like in your face, it will turn that part of your face into a dog. "It's like the neural network is hallucinating," said Steven Hansen, an intern at DeepMind, Google's AI lab in London. "It sees dogs everywhere!" Or, if you feed the network an image of random noise, it may generate a tree or a tower, or an entire city of towers. In the same noisy image, it may find the faint outlines of a pig and a snail and produce a frightening new creature that combines the features of both. Imagine a machine on hallucinogens.

| Virtually Art

For developer Alexander Mordvintsev, the original aim of this technology was to better understand how neural networks behave. Although artificial neural networks have shown the world how powerful they are, parts of their behavior remain a mystery. We cannot fully explain what really happens inside these combinations of hardware and software. Mordvintsev and other researchers are still working to understand them fully. Meanwhile, another Google engineer, Mike Tyka, saw the potential to create artwork with the technology. Besides working on artificial neural networks at Google, he is also a sculptor. He sees the technology as a way to combine technology and art.

Artists like Tyka choose the images that are fed to the artificial neural networks, and they can tune certain aspects of a network's behavior. They can even retrain a network so that it recognizes new patterns, which opens up seemingly endless possibilities. Some of the works generated this way look very similar, especially those full of spirals, dogs, and trees. Others, however, venture in directions of their own, depicting desolate landscapes and cold machinery.

On Friday night, four neural network artworks that Tyka was responsible for were successfully auctioned: "Castles in the Sky With Diamonds", "Ground Still State of God's Original Brigade", "Carboniferous Fantasy", and "Babylon of the Blue Sun". Throughout the gallery, strange titles like these accompany the strange imagery. That is not surprising: according to Joshua To, who co-curated the exhibition, the titles were also chosen by an artificial neural network. A New York University graduate named Ross Goodwin used the same kind of neural network technology to generate the titles for Tyka's work.

For Steven Hansen, these automatically generated images are not a big leap forward. "It feels like an advanced version of Photoshop," he said. But at the very least, DeepDream symbolizes a huge change in this field: the machine can do more and more on its own. In Google's search engine, this change is becoming increasingly obvious. As machines do more of the work, the human role in search shrinks, or at least humans move further and further away from the decisions behind search results.

This shift is not limited to Google's search engine. It spans many areas of online services and technology. On Friday, in a corner of the gallery, Google staff invited visitors to put on the company's cardboard VR viewers and explore "Deep Dream" in more depth. The cardboard viewers showed people a small but realistic "simulated universe". The technology is also developing rapidly, so it is no exaggeration to predict that machines will one day create much larger virtual worlds on their own. Clay Bavor, the head of Google's VR project, was not only a visitor to the exhibition but also one of the sponsors behind it, and Joshua To also works in Google's VR group.

Hans Holbein used technology to create his art, and the same approach now stands to transform entire fields profoundly.
