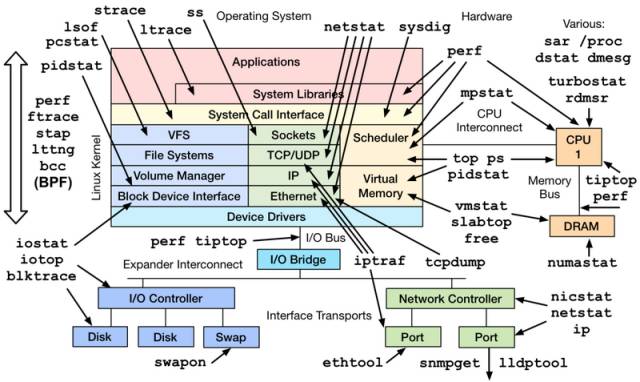

A server running Linux exposes a wide variety of runtime parameters. Operations staff and system administrators are generally very attentive to this data, but the parameters matter to developers as well: when your program misbehaves, these traces often help locate the problem quickly.

Here are just a few simple tools for viewing system parameters. Many of them work by parsing the data under /proc and /sys, and more detailed, professional performance monitoring and tuning may require more specialized tools (perf, systemtap, etc.) and techniques. After all, system performance monitoring is a deep discipline in its own right.

First, CPU and memory

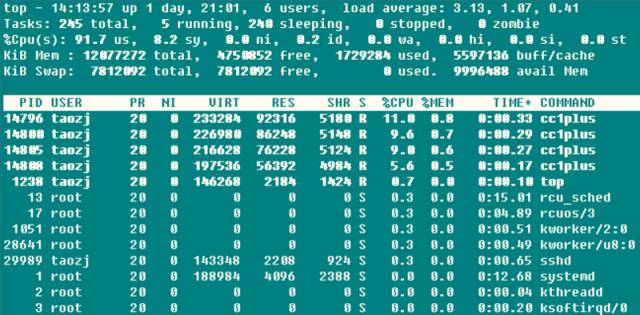

1.1 top

➜ ~ top

The three values at the end of the first line are the system load averages over the previous 1, 5, and 15 minutes; comparing them also shows whether the load is rising, steady, or declining. When the value exceeds the number of CPU execution units, the CPU is saturated and has become a bottleneck.
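As a rough illustration of this saturation check, the following Python sketch parses text in the format of /proc/loadavg and compares each load average with a CPU count. The function names, the sample text, and the CPU count of 4 are assumptions made for this example; on a real system the text would be read from /proc/loadavg and the count could come from os.cpu_count().

```python
def parse_loadavg(text):
    """Return the 1, 5 and 15 minute load averages from /proc/loadavg text."""
    one, five, fifteen = (float(value) for value in text.split()[:3])
    return one, five, fifteen


def saturated_windows(loads, cpu_count):
    """Return the windows (in minutes) whose load exceeds the CPU count."""
    return [minutes for minutes, load in zip((1, 5, 15), loads) if load > cpu_count]


# Hypothetical sample in the format of /proc/loadavg.
SAMPLE = "5.20 3.10 1.75 2/389 12345\n"
loads = parse_loadavg(SAMPLE)
print(loads, saturated_windows(loads, cpu_count=4))
```

In this sample only the 1 minute average exceeds four CPUs, which would suggest a recent spike rather than sustained saturation.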

The second line summarizes task states. running needs little explanation: it covers tasks currently on a CPU as well as tasks that are ready and waiting to be scheduled. sleeping tasks are usually waiting for an event (such as an IO operation) to complete, and can be subdivided into interruptible and uninterruptible sleep. stopped tasks are paused, typically by sending SIGSTOP or pressing Ctrl-Z on a foreground task. zombie tasks have terminated and had their resources reclaimed automatically, but the task descriptor holding the exit status is only released once the parent process collects it; such a process is shown as defunct. Whether the cause is a parent that exited prematurely or one that never calls wait, zombies deserve special attention because they may point to a design error in the program.

The third line breaks CPU usage down by type:

(us) user: the time the CPU spends in user mode running tasks with a low nice value (high priority, nice<=0). Normally, as long as the server is not sitting idle, most CPU time should be spent here.

(sy) system: the time the CPU spends in kernel mode. A program moves from user mode to kernel mode through a system call to request a specific service. The value is usually small, but it grows when the server performs intensive IO.

(ni) nice: the time the CPU spends in user mode running tasks with a high nice value (low priority, nice>0). A newly started process has nice = 0 by default and is not counted here unless you change its nice value with renice or setpriority().

(id) idle: the time spent by the CPU in the idle state (executing the kernel idle handler)

(wa) iowait: waiting for IO to complete

(hi) irq: the amount of time the system spends processing hardware interrupts

(si) softirq: the time the system spends processing soft interrupts. Recall that deferred interrupt work is divided into softirqs, tasklets (actually a special case of the former), and work queues. I do not know which of these are counted here; after all, work queues do not execute in interrupt context.

(st) steal: meaningful only on virtual machines. A virtual CPU shares the physical CPU with others, so this is the time the virtual machine spent waiting for the hypervisor to schedule it, meaning the hypervisor gave the physical CPU to another virtual CPU during that period and this slice of CPU was "stolen". On my KVM VPS this value is not 0, but only on the order of 0.1. Could it be used to judge whether a VPS is oversold?
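The categories above correspond to the counters on the aggregate cpu line of /proc/stat, which appear in the order user, nice, system, idle, iowait, irq, softirq, steal. The sketch below turns two samples of that line into percentages. The function names and sample values are hypothetical, and top's own accounting may differ in detail.

```python
FIELDS = ("us", "ni", "sy", "id", "wa", "hi", "si", "st")


def parse_cpu_line(line):
    """Map the first eight counters of a /proc/stat 'cpu' line to top's names."""
    parts = line.split()
    return dict(zip(FIELDS, (int(value) for value in parts[1:9])))


def cpu_percentages(before, after):
    """Return the share of each category between two samples, in percent."""
    deltas = {name: after[name] - before[name] for name in FIELDS}
    total = sum(deltas.values()) or 1  # avoid dividing by zero
    return {name: 100.0 * delta / total for name, delta in deltas.items()}


# Hypothetical samples of the aggregate 'cpu' line, taken some seconds apart.
T0 = "cpu  100 0 50 800 50 0 0 0 0 0"
T1 = "cpu  160 0 70 1650 110 5 5 0 0 0"
usage = cpu_percentages(parse_cpu_line(T0), parse_cpu_line(T1))
print(usage)
```

Working with deltas matters because the counters are cumulative since boot; a single reading only gives the long-term average.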

High CPU usage can mean many things. The breakdown above gives a direction for investigating when a server's CPU usage is too high:

When user usage is too high, a few individual processes are usually consuming a lot of CPU, and they are easy to find with top. If you suspect such a program is misbehaving, you can investigate further with perf and similar tools to find the hot functions.

When system usage is too high, heavy IO (including terminal IO) can explain it, for example on file servers and database servers. Otherwise (e.g., >20%) it is likely that some part of the kernel or a driver module has a problem.

When nice usage is too high, it is usually intentional: whoever started the process knew it would consume a lot of CPU and set its nice value so that it would not crowd out other processes' requests for the CPU.

When iowait usage is too high, it usually means that some programs perform IO inefficiently, or that the underlying device is so slow that reads and writes take a long time to complete.

When irq/softirq usage is too high, a peripheral may be faulty and generating a large number of interrupt requests. Check the /proc/interrupts file to investigate.

When steal usage is too high, an unscrupulous provider has oversold the virtual machines!

The fourth and fifth lines show physical memory and virtual memory (swap partition) information: total = free + used + buff/cache. Buffers and cached Mem are now reported as a single sum, but the relationship between buffers and cached Mem is not clearly explained in many places. Comparing the data shows that the two values are the Buffers and Cached fields in /proc/meminfo. Buffers is the block cache for raw disks, mainly used to cache file system metadata (such as superblock information) in raw block form, and is generally small (around 20M). Cached is the read cache for specific files, used to speed up file access; in other words, it is the file cache of the file system.

avail Mem is a newer field indicating how much memory can be given to a newly started program without swapping. It is roughly equivalent to free + buff/cache, which confirms the statement above: free + buffers + cached Mem is the physical memory that is truly available. Also, using the swap partition is not necessarily a bad thing, so swap usage by itself is not a serious indicator. Frequent swap in/out, however, is not a good sign; it deserves attention and usually indicates a shortage of physical memory.
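To make the relationship between these fields concrete, here is a small Python sketch that parses text in the format of /proc/meminfo and compares the kernel's MemAvailable figure with the rough free + buffers + cached estimate. The function name and all sample numbers are invented for illustration.

```python
def parse_meminfo(text):
    """Return /proc/meminfo fields as a dict of name -> value (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        if rest.strip():
            info[name.strip()] = int(rest.split()[0])
    return info


# Hypothetical excerpt in the format of /proc/meminfo.
SAMPLE = """\
MemTotal:        8000000 kB
MemFree:         1000000 kB
MemAvailable:    5500000 kB
Buffers:           20000 kB
Cached:          4000000 kB
SwapTotal:       2000000 kB
SwapFree:        1900000 kB
"""
info = parse_meminfo(SAMPLE)
rough_available = info["MemFree"] + info["Buffers"] + info["Cached"]
swap_used = info["SwapTotal"] - info["SwapFree"]
print(info["MemAvailable"], rough_available, swap_used)
```

The two availability figures will not match exactly on a real system; the estimate is only meant to show which fields contribute.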

Last comes the list of resource usage per program, in which a program's CPU usage is the sum of its usage across all CPU cores. While top is running, it reads /proc heavily itself, so top will often appear near the head of the list as well.

Although top is very powerful, it is usually used to watch system information in real time on the console. It is not suited to monitoring system load over long periods (days or months), and it cannot give statistics for short-lived processes.

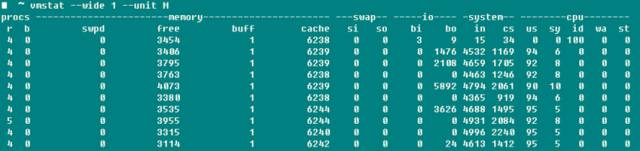

1.2 vmstat

Besides top, vmstat is another commonly used system monitoring tool. The screenshot shows the system load while I compiled boost with -j4.

r is the number of runnable processes, and the figure roughly matches; b is the number of processes in uninterruptible sleep. swpd is the amount of virtual memory used, which means the same as the Swap used value in top. As the manual says, buffers is usually much smaller than cached Mem, generally on the order of 20M. In the io group, bi and bo are the number of blocks per second (blocks/s) received from and sent to the disk. In the system group, in is the number of interrupts per second (including clock interrupts), and cs is the number of context switches per second caused by process switching.

This reminds me of how many people agonize over the -j value when compiling the Linux kernel: should it be the number of CPU cores, or cores+1? I compiled boost and the Linux kernel with different -j values while running vmstat, and found that the context switch rate was basically the same in both cases; it rose noticeably only when -j was increased significantly. So there seems to be no need to agonize over this parameter, although I did not measure the actual compile times. According to the references, unless the system is starting up or running a benchmark, a context switch rate >100000 must indicate a problem.
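A context switch rate like the cs column can be approximated from the cumulative ctxt counter in /proc/stat. The Python sketch below shows the arithmetic on two hypothetical samples; the function names, the sample values, and the 5 second interval are assumptions, and the 100000 threshold is simply the figure quoted above.

```python
def read_ctxt(stat_text):
    """Return the cumulative context switch count from /proc/stat text."""
    for line in stat_text.splitlines():
        if line.startswith("ctxt "):
            return int(line.split()[1])
    raise ValueError("no ctxt line found")


def switches_per_second(before_text, after_text, interval_s):
    """Return the average context switch rate between two samples."""
    return (read_ctxt(after_text) - read_ctxt(before_text)) / interval_s


# Hypothetical excerpts of /proc/stat taken 5 seconds apart.
T0 = "cpu  100 0 50 800 50 0 0 0 0 0\nctxt 1000000\nbtime 1700000000\n"
T1 = "cpu  160 0 70 1650 110 5 5 0 0 0\nctxt 1250000\nbtime 1700000000\n"
rate = switches_per_second(T0, T1, interval_s=5)
print(rate, rate > 100000)
```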

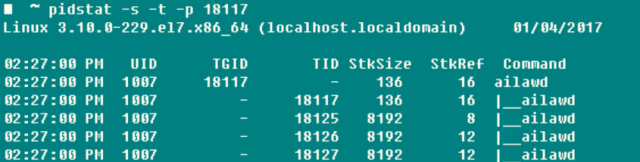

1.3 pidstat

If you want comprehensive, detailed tracking of a single process, nothing is more appropriate than pidstat: it reports stack space, page faults, voluntary and involuntary context switches, and more. The most useful option of this command is -t, which lists details for each thread in the process.

-r: displays page faults and memory usage. A page fault occurs when a program accesses a page that is mapped in its virtual address space but has not yet been loaded into physical memory. The two main types of page fault are:

minflt/s is the rate of minor faults. The physical page to be accessed is already in physical memory for some reason (shared pages, caching, and so on) but is not referenced in the current process's page table. Only the corresponding page table entry needs to be set up, so the cost is quite small.

majflt/s is the rate of major faults. The kernel has to allocate a free physical page from available memory (if none is free, other pages must first be swapped out to free one), load the data from external storage into that page, and set up the corresponding entry. The cost is quite high, several orders of magnitude above a minor fault.

-s: stack usage, including StkSize, the stack space reserved for the thread, and StkRef, the stack space actually used. ulimit -s shows that the default stack size is 10240K on CentOS 6.x and 8192K on CentOS 7.x and Ubuntu.

-u: CPU utilization; the fields are similar to those described earlier.

-w: the number of context switches per thread, subdivided into cswch/s, voluntary switches caused by waiting for resources and similar factors, and nvcswch/s, involuntary switches forced when the thread uses up its CPU time slice.
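The page fault and context switch counters that pidstat -r and -w report are also exposed per process under /proc. The following Python sketch derives per-second rates from two samples of /proc/<pid>/stat (fields 10 and 12 are minflt and majflt) and /proc/<pid>/status. The function names, the sample process, and every number in it are hypothetical; this is an approximation of the idea, not pidstat's actual implementation.

```python
def parse_faults(stat_text):
    """Return (minflt, majflt) from the text of /proc/<pid>/stat."""
    # The command name (field 2) may contain spaces, so split after the last ')'.
    rest = stat_text[stat_text.rindex(")") + 1:].split()
    # rest[0] is the state (field 3); minflt is field 10 and majflt is field 12.
    return int(rest[7]), int(rest[9])


def parse_switches(status_text):
    """Return (voluntary, nonvoluntary) switches from /proc/<pid>/status text."""
    counts = {}
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        counts[key] = value.strip()
    return (int(counts["voluntary_ctxt_switches"]),
            int(counts["nonvoluntary_ctxt_switches"]))


def per_second(before, after, interval_s):
    """Turn two tuples of cumulative counters into per-second rates."""
    return tuple((new - old) / interval_s for old, new in zip(before, after))


# Hypothetical samples taken 2 seconds apart for an invented process.
STAT_T0 = "1234 (my prog) S 1 1234 1234 0 -1 4194304 1500 0 12 0 10 5 0 0 20 0 1 0 100"
STAT_T1 = "1234 (my prog) S 1 1234 1234 0 -1 4194304 1700 0 14 0 12 6 0 0 20 0 1 0 100"
STATUS_T0 = "Name:\tmy prog\nvoluntary_ctxt_switches:\t150\nnonvoluntary_ctxt_switches:\t7\n"
STATUS_T1 = "Name:\tmy prog\nvoluntary_ctxt_switches:\t250\nnonvoluntary_ctxt_switches:\t9\n"

fault_rates = per_second(parse_faults(STAT_T0), parse_faults(STAT_T1), 2)
switch_rates = per_second(parse_switches(STATUS_T0), parse_switches(STATUS_T1), 2)
print(fault_rates, switch_rates)
```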

Looking up a program's pid every time before running pidstat is tedious, so the killer option is -C: give it a string, and statistics are printed for every program whose command name contains that string. -l shows the complete program name and arguments.

➜ ~ pidstat -w -t -C "ailaw" -l

So for examining a single task, especially a multi-threaded one, pidstat is better than the usual ps!

1.4 Others

When you need to monitor each CPU separately, besides htop you can use mpstat to see whether the workload is balanced across the cores of an SMP processor and whether some hot thread is occupying a core.

➜ ~ mpstat -P ALL 1

If you want to monitor the resources used by particular processes directly, you can use top -u taozj to filter out processes belonging to other users. You can also select them as follows; the ps command lets you customize the columns to print:

while :; do ps -eo user,pid,ni,pri,pcpu,psr,comm | grep 'ailawd'; sleep 1; done

To sort out parent-child relationships, the following common options display the process tree, in more detail and more neatly than pstree:

➜ ~ ps axjf

Second, disk IO

iotop intuitively shows the real-time disk read and write rates of each process and thread. lsof shows who has ordinary files open, and also who has device files such as /dev/sda1 open. So when a partition cannot be unmounted, you can use lsof to find out what is still using it, and adding the +fg option additionally displays the file open flags.

2.1 iostat

➜ ~ iostat -xz 1

In fact, whether you use iostat -xz 1 or sar -d 1, the important disk parameters are:

avgqu-sz: the average queue length of I/O requests issued to the device. For a single disk, a value >1 indicates that the device is saturated; this does not apply to logical disks backed by multi-disk arrays.

await (r_await, w_await): the average time (ms) for each I/O request to the device, including both the time spent queued and the time spent being serviced.

svctm: the average service time (ms) of I/O requests issued to the device. If svctm is close to await, there is almost no I/O queuing and disk performance is good; otherwise the queue wait is long and the disk responds poorly.

%util: device utilization, the percentage of each second the device spent doing I/O. Single-disk performance starts to degrade when %util >60% (reflected in a rising await), and the device is saturated as the value approaches 100%, again except for logical disks backed by multi-disk arrays.
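The await, avgqu-sz, and %util figures can be approximated from two samples of a device's line in /proc/diskstats, which holds cumulative I/O counts and millisecond timers. The Python sketch below shows that arithmetic. The field positions follow the kernel's diskstats layout, while the function names, the sample lines, and the 1 second interval are assumptions; the exact formulas used by iostat may differ in detail.

```python
def parse_diskstats_line(line):
    """Pick the counters needed here from one /proc/diskstats line."""
    f = line.split()
    return {
        "name": f[2],
        "reads": int(f[3]),            # reads completed
        "read_ms": int(f[6]),          # time spent reading (ms)
        "writes": int(f[7]),           # writes completed
        "write_ms": int(f[10]),        # time spent writing (ms)
        "io_ms": int(f[12]),           # time the device was busy (ms)
        "weighted_io_ms": int(f[13]),  # busy time weighted by queue depth (ms)
    }


def disk_metrics(before, after, interval_s):
    """Approximate await, avgqu-sz and %util between two samples."""
    ios = (after["reads"] - before["reads"]) + (after["writes"] - before["writes"])
    wait_ms = ((after["read_ms"] - before["read_ms"])
               + (after["write_ms"] - before["write_ms"]))
    interval_ms = interval_s * 1000.0
    return {
        "await": wait_ms / ios if ios else 0.0,
        "avgqu-sz": (after["weighted_io_ms"] - before["weighted_io_ms"]) / interval_ms,
        "%util": 100.0 * (after["io_ms"] - before["io_ms"]) / interval_ms,
    }


# Hypothetical samples for one disk, taken 1 second apart.
T0 = "8 0 sda 1000 0 8000 2000 500 0 4000 3000 0 1500 5000"
T1 = "8 0 sda 1100 0 8800 2400 600 0 4800 3600 0 2100 6000"
metrics = disk_metrics(parse_diskstats_line(T0), parse_diskstats_line(T1), 1)
print(metrics)
```

With these invented numbers the disk sits right at the thresholds mentioned above: a queue length of 1 and 60% utilization.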

Also, even when the monitored disk performance is poor, the application's response time is not necessarily affected. The kernel usually performs I/O asynchronously and uses read and write caching to improve performance, though this in turn is constrained by the physical memory limits discussed above.

The parameters above also apply to network file systems.

Third, the network

The importance of network performance to a server is self-evident. The tool iptraf intuitively shows the send and receive rates of a network card and is simple and convenient to use; similar throughput information is available from sar -n DEV 1. Since network cards come with standard rated speeds, such as Fast Ethernet or Gigabit, it is easy to work out the utilization of the device.

Usually, though, the network card's transfer rate is not the main concern in network development. What matters more is the packet loss rate and retransmission rate of specific UDP and TCP connections, along with network latency and similar information.

3.1 netstat

➜ ~ netstat -s

This displays overall statistics for each protocol since the system started. The information is rich and useful, but the values are cumulative: to get the current network state you must take the difference between two runs, or use watch to eyeball how the numbers trend. So netstat is more often used to inspect ports and connections:

netstat --all(a) --numeric(n) --tcp(t) --udp(u) --timers(o) --listening(l) --program(p)

--numeric disables reverse domain name lookups, which speeds up the display. Commonly used forms:

➜ ~ netstat -antp # list all TCP connections

➜ ~ netstat -nltp # List all local TCP listening sockets, do not add -a parameter
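Because the protocol counters shown by netstat -s are cumulative, a current figure has to come from the difference between two readings. The Python sketch below does this for the Tcp rows of /proc/net/snmp, which expose counters such as OutSegs and RetransSegs, and derives a retransmission ratio. The function name, the sample text, and its numbers are hypothetical.

```python
def parse_snmp(text, proto="Tcp"):
    """Return one protocol's counters from /proc/net/snmp text as a dict."""
    prefix = proto + ":"
    rows = [line.split()[1:] for line in text.splitlines() if line.startswith(prefix)]
    names, values = rows[0], rows[1]  # a header row followed by a value row
    return dict(zip(names, (int(value) for value in values)))


HEADER = ("Tcp: RtoAlgorithm RtoMin RtoMax MaxConn ActiveOpens PassiveOpens "
          "AttemptFails EstabResets CurrEstab InSegs OutSegs RetransSegs "
          "InErrs OutRsts InCsumErrors\n")
# Hypothetical readings taken some time apart.
T0 = HEADER + "Tcp: 1 200 120000 -1 100 50 3 2 10 10000 9000 40 0 5 0\n"
T1 = HEADER + "Tcp: 1 200 120000 -1 104 52 3 2 11 10600 9500 45 0 5 0\n"

before, after = parse_snmp(T0), parse_snmp(T1)
sent = after["OutSegs"] - before["OutSegs"]
retransmitted = after["RetransSegs"] - before["RetransSegs"]
retrans_percent = 100.0 * retransmitted / sent if sent else 0.0
print(sent, retransmitted, retrans_percent)
```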

3.2 sar

sar is an extremely powerful tool that covers CPU, disk, page swapping, and more. Here the -n option is used to analyze network activity. Within networking it further breaks the data down by layer and protocol, such as NFS, IP, ICMP, and SOCK, but we only care about TCP and UDP. Besides showing the regular send and receive rates of segments and datagrams, the following commands report:

TCP

➜ ~ sudo sar -n TCP,ETCP 1

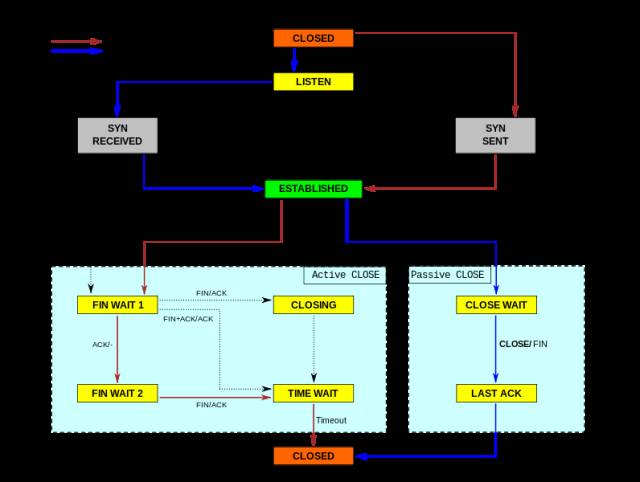

active/s: locally initiated TCP connections per second, for example via connect(); the TCP state goes from CLOSED -> SYN-SENT

passive/s: remotely initiated TCP connections per second, for example via accept(); the TCP state goes from LISTEN -> SYN-RCVD

retrans/s (tcpRetransSegs): TCP retransmissions per second. When network quality is poor, or packets are dropped because the server is overloaded, the TCP retransmission mechanism resends them.

isegerr/s (tcpInErrs): segments received in error per second (for example, with a failed checksum)

UDP

➜ ~ sudo sar -n UDP 1

noport/s (udpNoPorts): datagrams received per second for which there was no application at the destination port

idgmerr/s (udpInErrors): datagrams received per second that could not be delivered for reasons other than the above

These figures say something about the reliability of the network, but they only become meaningful when combined with the specific business scenario.

3.3 tcpdump

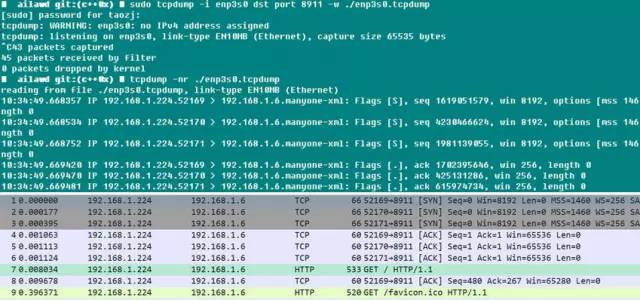

tcpdump really is a fine tool. Everyone likes to use wireshark when debugging locally, but what do you do when the problem occurs on a production server?

The reference in the appendix suggests an approach: reproduce the environment and capture packets with tcpdump, then stop the capture once the problem reappears (as shown by a log entry or some status). tcpdump's -C/-W options limit the size of the capture files; when the limit is reached, the saved packet data is rotated automatically, so the total amount captured stays under control. The capture files can then be taken offline and inspected with wireshark however you like. Although tcpdump has no GUI, its capture capability is not weak: you can filter by network interface, host, port, protocol, and so on, and the captured packets are complete and timestamped, so packet analysis for production programs can be this simple.

Below is a small test. It shows that Chrome automatically opens three connections to the web server when it starts. Because a dst port filter is applied here, the server's response packets are filtered out. Opened in wireshark, the SYN and ACK steps of connection establishment are still very obvious! When using tcpdump, configure the capture filter as tightly as possible: it makes the later analysis easier, and since tcpdump affects network card and system performance once it is running, a tight filter also limits the impact on production services.
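As a minimal sketch of the rotating, filtered capture described here, the Python helper below only assembles a tcpdump argument list and prints it; it does not run the capture. The interface name eth0, the file name capture.pcap, the size and count limits, and the dst port 80 filter are all placeholder assumptions. -C takes the file size in millions of bytes, -W the number of files to rotate through, and -w the output file.

```python
import shlex


def tcpdump_command(interface, out_file, file_size_mb, file_count, filter_expr):
    """Build an argument list for a size-limited, rotating tcpdump capture."""
    return [
        "tcpdump",
        "-i", interface,          # network interface to capture on
        "-C", str(file_size_mb),  # start a new file after this many million bytes
        "-W", str(file_count),    # keep at most this many files, then overwrite
        "-w", out_file,           # write raw packets for later analysis
    ] + shlex.split(filter_expr)  # capture filter, e.g. "dst port 80"


# Placeholder values chosen only for illustration.
command = tcpdump_command("eth0", "capture.pcap", 100, 5, "dst port 80")
print(shlex.join(command))
```

The resulting list could be passed to subprocess.run on the server (capturing normally needs root privileges), and the saved files opened in wireshark afterwards.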
