University of Toronto researchers have designed a new algorithm that protects users' privacy by dynamically disrupting face recognition tools. Their results show that the system reduces the proportion of detectable faces from nearly 100% to 0.5%.

On some social media platforms, every time you upload a photo or video, the platform's face recognition system tries to learn more from it. These algorithms extract data about who you are, where you are, and who you know, and they are constantly improving.

Now face recognition has met its nemesis: "anti-face recognition".

A team led by Professor Parham Aarabi and graduate student Avishek Bose at the University of Toronto has developed an algorithm that dynamically disrupts face recognition systems.

Their solution uses a deep learning technique called adversarial training, which pits two artificial intelligence algorithms against each other.

Deep neural networks are now applied to a wide range of problems, such as self-driving vehicles and cancer detection. However, we urgently need to better understand how these models are vulnerable. In image recognition, adding a small, often imperceptible, perturbation to an image can fool a typical classification network into misclassifying it.

These perturbed images are called adversarial examples, and they can be used to mount adversarial attacks on a network. There are already several ways to create adversarial examples. They vary widely in complexity, computational cost, and the level of access to the attacked model that they require.

In general, adversarial attacks can be classified by the attacker's level of access to the model and by the adversarial goal. White-box attacks have full access to the structure and parameters of the model they attack; black-box attacks can only access the attacked model's output.

A baseline method is the Fast Gradient Sign Method (FGSM), which perturbs the input image in the direction of the sign of the gradient of the classifier's loss with respect to that image. FGSM is a white-box approach because it requires access to the internals of the attacked classifier. Several stronger attacks exist against deep neural networks for image classification, such as L-BFGS, the Jacobian-based Saliency Map Attack (JSMA), DeepFool, and Carlini-Wagner. However, these methods involve a complicated optimization over the space of possible perturbations, which makes them slow and computationally expensive.
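To make the FGSM idea concrete, here is a minimal sketch on a toy logistic classifier. The model, weights, input values, and `epsilon` are all hypothetical and chosen only for illustration; they are not taken from the researchers' work, and the step size is far larger than an imperceptible image perturbation would be.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def loss_gradient_wrt_input(weights, bias, x, label):
    """Gradient of the cross-entropy loss with respect to the input x.

    For logistic regression this equals (p - label) * w, which is why
    the attacker needs white-box access to the weights.
    """
    p = predict(weights, bias, x)
    return [(p - label) * w for w in weights]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """One FGSM step: shift every input value by epsilon in the direction
    that increases the loss, then clip to the valid pixel range [0, 1]."""
    grad = loss_gradient_wrt_input(weights, bias, x, label)
    return [min(1.0, max(0.0, xi + epsilon * sign(g))) for xi, g in zip(x, grad)]

# Hypothetical toy classifier and 4-"pixel" input (assumptions for this sketch).
weights = [2.0, -3.0, 1.5, -1.0]
bias = 0.1
x = [0.6, 0.3, 0.5, 0.4]

x_adv = fgsm(weights, bias, x, label=1, epsilon=0.15)
print("clean score:      ", round(predict(weights, bias, x), 3))
print("adversarial score:", round(predict(weights, bias, x_adv), 3))
```

A single gradient computation is enough to push the toy classifier's score from above 0.5 to below it, while no input value moves by more than `epsilon`.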

Attacking an object detection pipeline is much harder than attacking a classification model. State-of-the-art detectors, such as Faster R-CNN, generate object proposals at different scales and locations and then classify each of them, so the number of targets to attack is several orders of magnitude larger than for a classification model.

In addition, if the attacked proposals are only a small subset of the total, the perturbed image can still be detected correctly through a different subset of proposals. A successful attack therefore has to fool all object proposals at the same time.
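The requirement that every proposal be fooled can be expressed in a few lines. This is only an illustrative sketch: the function names, the confidence scores, and the threshold `alpha` are hypothetical, and a real detector also applies steps such as non-maximum suppression that are omitted here.

```python
def face_detected(proposal_scores, alpha):
    """A face counts as detected if ANY proposal scores at or above alpha."""
    return any(score >= alpha for score in proposal_scores)

def attack_succeeds(proposal_scores_after_attack, alpha):
    """The attack succeeds only if EVERY proposal is pushed below alpha."""
    return all(score < alpha for score in proposal_scores_after_attack)

alpha = 0.5  # hypothetical confidence threshold

# Hypothetical face-confidence scores for four proposals covering one face.
clean = [0.98, 0.95, 0.91, 0.40]
partial_attack = [0.10, 0.12, 0.91, 0.05]  # one proposal survives
full_attack = [0.10, 0.12, 0.20, 0.05]     # all proposals suppressed

print(face_detected(clean, alpha))             # True
print(attack_succeeds(partial_attack, alpha))  # False
print(attack_succeeds(full_attack, alpha))     # True
```

Suppressing three of the four proposals is not enough: the single surviving proposal still yields a detection.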

In this work, the researchers demonstrate that fast adversarial attacks on state-of-the-art face detectors are possible.

The researchers have developed a "privacy filter" that interferes with face recognition algorithms. The system relies on two AI algorithms: one that performs continuous face detection and another that works to disrupt the first.

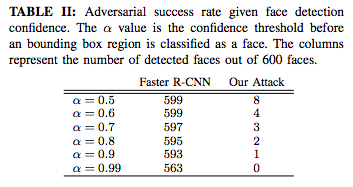

The researchers proposed a new attack method for face detectors based on Faster R-CNN. This method generates a small perturbation which, when added to the input face image, results in the failure of the pre-trained face detector.

To generate the perturbation, the researchers train a generator against a pre-trained Faster R-CNN face detector. Given an image, the generator produces a small perturbation that can be added to the image to fool the face detector. The face detector is trained offline on unperturbed images only, so it is unaware of the generator's existence.

Over time, the generator learns to create perturbations that effectively fool the face detector it is trained against. Generating an adversarial example is fast and cheap, even cheaper than FGSM, because once the generator is fully trained, creating a perturbation for an input requires only a forward pass.
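The training setup described above can be sketched with a toy stand-in. Everything here is an assumption made for illustration: the "detector" is a frozen logistic scorer rather than Faster R-CNN, the "generator" is a tiny per-pixel function with parameters `a` and `c`, the perturbation is bounded by `eps` through a tanh, and the loss is simply the detector's confidence. The researchers' actual architecture and loss are not reproduced here.

```python
import math

def detector_confidence(x, w, b):
    """Frozen 'detector': logistic score that the input contains a face."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def generate(x, a, c, eps):
    """Generator forward pass: an image-conditioned perturbation,
    bounded in magnitude by eps."""
    return [eps * math.tanh(ai * xi + ci) for ai, xi, ci in zip(a, x, c)]

def train_generator(images, w, b, eps=0.2, lr=0.5, epochs=200):
    """Fit the generator parameters (a, c) by gradient descent so that the
    frozen detector's confidence drops. The detector (w, b) is never updated."""
    n = len(w)
    a, c = [0.0] * n, [0.0] * n
    for _ in range(epochs):
        for x in images:
            delta = generate(x, a, c, eps)
            x_adv = [xi + di for xi, di in zip(x, delta)]
            p = detector_confidence(x_adv, w, b)
            for i in range(n):
                t = delta[i] / eps  # tanh(a_i * x_i + c_i)
                # Chain rule through the detector and the tanh.
                g_u = p * (1.0 - p) * w[i] * eps * (1.0 - t * t)
                a[i] -= lr * g_u * x[i]
                c[i] -= lr * g_u
    return a, c

# Hypothetical frozen detector and training images (assumptions).
w, b = [2.0, -3.0, 1.5, -1.0], 0.1
train_images = [[0.6, 0.3, 0.5, 0.4], [0.7, 0.2, 0.4, 0.5], [0.5, 0.2, 0.6, 0.3]]
a, c = train_generator(train_images, w, b)

# At attack time, a single forward pass yields the perturbation for a new image.
eps = 0.2
new_image = [0.65, 0.25, 0.45, 0.45]
delta = generate(new_image, a, c, eps)
perturbed = [xi + di for xi, di in zip(new_image, delta)]
print("before:", round(detector_confidence(new_image, w, b), 3))
print("after: ", round(detector_confidence(perturbed, w, b), 3))
```

The cost asymmetry is the point of the design: gradients through the detector are needed only during training, whereas FGSM needs a gradient computation for every image it attacks.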

Two neural networks compete to form a "privacy" filter

The researchers designed two neural networks: the first identifies faces, and the second works to disrupt the first network's face recognition task. The two networks constantly compete and learn from each other.

The result is an Instagram-like "privacy" filter that can be applied to photos to protect privacy. The secret is that the algorithm changes specific pixels in the photo, but the changes are barely noticeable to the human eye.

"The disruptive AI can 'attack' what the face detection neural network is looking for," said Bose, lead author of the project. "For example, if the detection network is looking for the corners of the eyes, the disruptive algorithm adjusts the corners of the eyes so that they are less noticeable. The algorithm creates very subtle disturbances in the photo, but to the detector these disturbances are enough to fool the system."

Algorithm 1: Adversarial Generator Training

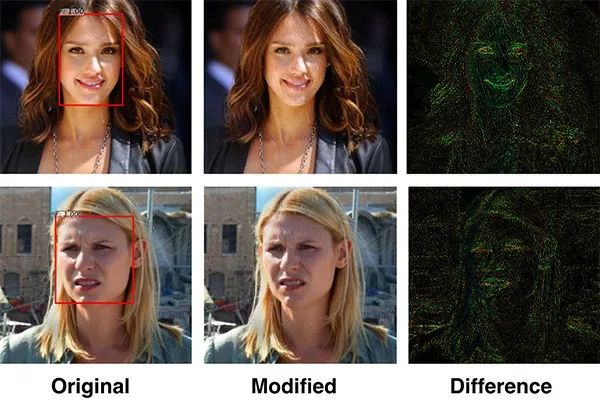

Attack success rate at a given face detection confidence threshold. The alpha value is the confidence threshold above which a bounding box region is classified as a face, and the two right-hand columns give the number of faces detected in 600 photographs.
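The way such a table is tallied can be sketched as follows. The scores below are invented for illustration and are not the researchers' results; `count_detected` is a hypothetical helper that counts the images whose best bounding box reaches the threshold alpha.

```python
def count_detected(best_scores, alpha):
    """Number of images whose highest-scoring box reaches the threshold alpha."""
    return sum(1 for score in best_scores if score >= alpha)

# Hypothetical best face confidence per image, before and after perturbation.
clean_scores = [0.99, 0.97, 0.93, 0.88, 0.71]
adversarial_scores = [0.62, 0.35, 0.20, 0.08, 0.03]

for alpha in (0.5, 0.7, 0.9):
    n_clean = count_detected(clean_scores, alpha)
    n_adv = count_detected(adversarial_scores, alpha)
    print(f"alpha={alpha}: clean {n_clean}/5, adversarial {n_adv}/5")
```

Raising alpha makes the detector stricter, so fewer faces are counted as detected in both columns; the attack is most meaningful at low thresholds, where the clean detector finds nearly every face.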

The researchers tested their system on the 300-W face dataset, an industry-standard collection of more than 600 faces covering many ethnicities, lighting conditions, and background environments. The results show that their system reduces the proportion of detectable faces from nearly 100% to 0.5%.

The proposed adversarial attack pipeline, in which the generator network G creates image-conditioned perturbations to fool the face detector.

Bose said: "The key here is to train two neural networks to fight each other - one to create an increasingly powerful face detection system, and the other to create a more powerful tool to disable face detection." The team's research will be presented and demonstrated at the upcoming 2018 IEEE International Workshop on Multimedia Signal Processing.

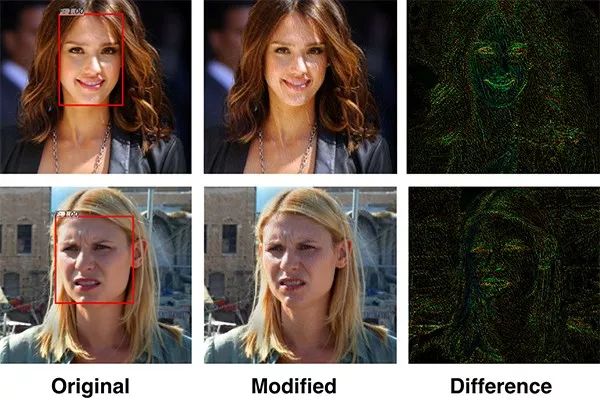

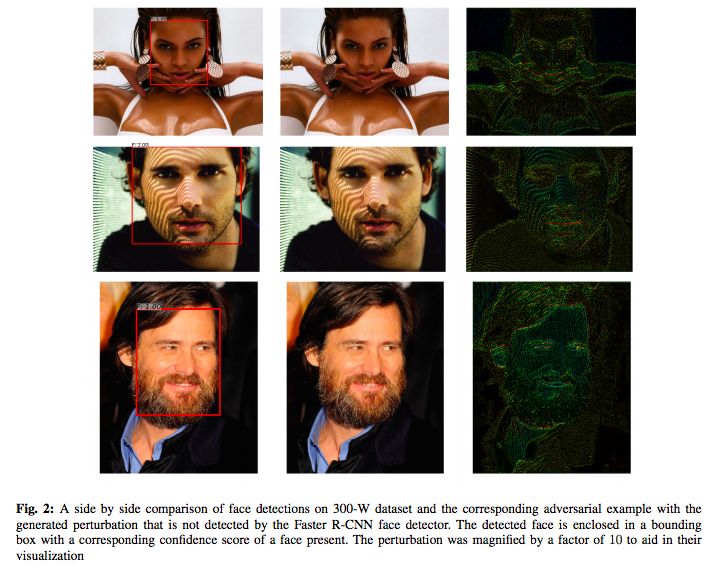

Face detections on images from the 300-W dataset, compared with the corresponding adversarial examples, which carry the generated perturbation and are not detected by the Faster R-CNN face detector. Each detected face is surrounded by a bounding box with its confidence value. For visualization, the perturbation is magnified 10 times.

In addition to disabling face recognition, the new technology also disrupts image-based search, feature identification, emotion and ethnicity estimation, and other functions that automatically extract face attributes.

Next, the team wants to make the privacy filter publicly available through an app or website.

"Ten years ago, these algorithms had to be defined by humans, but now neural networks are learning on their own - you don't need to provide them with anything except training data," Aarabi said. "Ultimately, they can do something extraordinary and have great potential."
