Abstract: This paper describes audio embedding technology in television systems. It covers the relevant digital audio and video signal standards, the embedding format, and how embedding is applied in a TV system. Audio embedding greatly reduces the cost of transmitting TV signals, improves the transmission quality of the audio signal, solves the problem of audio and video falling out of sync during transmission, achieves lossless transmission of audio and video, and improves the quality of television programs.

0 Introduction:

With the rapid development of the TV industry, digital TV technology has also advanced by leaps and bounds. During analog-to-digital conversion and transmission of television signals, video and audio are processed in very different ways and have different characteristics, so digital video lags behind digital audio. The visible result is that sound and picture are not synchronized.

Audio embedding allows audio and video signals, which previously had to be transmitted separately, to be combined and carried over a single video cable. This greatly reduces the hardware overhead and simplifies the signal routing needed to interconnect audio and video equipment in the studio, and it lets audio and video be transmitted and played back together.

In this paper, the SDI (Serial Digital Interface) signal defined by the SMPTE 259M standard is used as the digital video standard. Its biggest advantage is that it provides a large amount of auxiliary data space (Ancillary Data Space), which can be used to embed audio signals and other useful data. The auxiliary data space of the existing video format can accommodate up to 16 channels of audio data, which is sufficient for most needs of current television systems.

1 Digital audio and video standards:

1.1 AES/EBU digital audio signal:

A serial digital audio signal conforming to the AES/EBU standard carries two channels of audio. The two channels can be a stereo pair from the same source or two completely independent mono signals. The smallest transmission unit of an AES/EBU audio signal is a 64-bit frame. Each frame contains two 32-bit subframes, each carrying one sample of one of the two audio channels. Each subframe consists of a 4-bit preamble, 4 bits of auxiliary data, 20 bits of audio data and 4 further bits (V, U, C and P). The standard supports three sampling rates (48 kHz, 44.1 kHz and 32 kHz) and sample word lengths from 16 to 24 bits. The subframe format is shown in Figure 1.
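As a minimal sketch of the subframe layout just described, the Python below packs the data time slots of one subframe and sets the parity bit. It assumes the usual AES3 slot numbering (slots 0-3 preamble, 4-7 auxiliary data, 8-27 audio, then V, U, C and P in slots 28-31) and even parity over slots 4-31. The preamble slots are left at zero because a preamble is not ordinary biphase-mark data; the function names are illustrative only.

```python
# Time slots of one AES/EBU subframe: 0-3 preamble, 4-7 auxiliary data,
# 8-27 audio, 28 V, 29 U, 30 C, 31 P. Bit k of the packed int = slot k.
AUX_SHIFT = 4
AUDIO_SHIFT = 8
V_SLOT, U_SLOT, C_SLOT, P_SLOT = 28, 29, 30, 31


def pack_subframe(aux: int, audio: int, v: int, u: int, c: int) -> int:
    """Pack slots 4-31 of one subframe; the preamble slots stay 0."""
    if not 0 <= aux < 1 << 4:
        raise ValueError("aux must fit in 4 bits")
    if not 0 <= audio < 1 << 20:
        raise ValueError("audio must fit in 20 bits")
    word = (aux << AUX_SHIFT) | (audio << AUDIO_SHIFT)
    word |= ((v & 1) << V_SLOT) | ((u & 1) << U_SLOT) | ((c & 1) << C_SLOT)
    # Even parity: P makes the number of ones in slots 4-31 even.
    parity = bin(word).count("1") & 1
    return word | (parity << P_SLOT)


def parity_ok(word: int) -> bool:
    """True if slots 4-31 contain an even number of ones."""
    return bin(word >> AUX_SHIFT).count("1") % 2 == 0


# A 20-bit sample of 1 sets slot 8 only, so P must also be set.
print(hex(pack_subframe(0, 1, 0, 0, 0)))  # 0x80000100
```

Because P covers every data slot, a single flipped bit anywhere in slots 4-31 makes `parity_ok` return False.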

Figure 1 Frame format of AES/EBU serial audio

Within a subframe, the 4 auxiliary data bits hold the part of an audio sample word that exceeds 20 bits. When the word length is 21 to 24 bits, the sample extends into the auxiliary bits, and in a 24-bit word bit 4 is the least significant bit. When a 20-bit word length is sufficient, bits 8 to 27 carry the sample and bits 4 to 7 are available as auxiliary bits. V, U, C and P are the validity bit, user data bit, channel status bit and parity bit, respectively.
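The two word-length cases can be sketched as follows. This is an illustrative helper, not code from any library: it assumes a subframe already packed with bit k equal to time slot k, returns the sample MSB-justified (its MSB in slot 27), and treats the sample as two's complement when converting it to a signed value.

```python
from __future__ import annotations


def sample_from_subframe(word: int, word_length: int) -> tuple[int, int]:
    """Split a packed subframe (bit k = time slot k) into (sample, aux).

    21-24 bit words: slots 4-27 hold the sample (slot 4 is the LSB of a
    24-bit word), so there are no spare aux bits and aux is returned as 0.
    16-20 bit words: slots 8-27 hold the sample and slots 4-7 are aux bits.
    In both cases the sample is MSB-justified (its MSB is slot 27); shorter
    words simply have zero low-order bits.
    """
    if 21 <= word_length <= 24:
        return (word >> 4) & 0xFFFFFF, 0
    if 16 <= word_length <= 20:
        return (word >> 8) & 0xFFFFF, (word >> 4) & 0xF
    raise ValueError("AES/EBU word length must be 16 to 24 bits")


def to_signed(value: int, bits: int) -> int:
    """Interpret a two's-complement field of the given width."""
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


word = (0xABCDE << 8) | (0x5 << 4)
print(sample_from_subframe(word, 20), sample_from_subframe(word, 24))
```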

In a continuous AES/EBU serial audio stream, frames are further organized into blocks of 192 frames. The first subframe of the first frame in a block begins with preamble Z; every other subframe 1 begins with preamble X, and every subframe 2 begins with preamble Y. For each of the two channels, the channel status bits of one block are assembled into a 192-bit channel status word, which carries important information about the audio signal such as the sample word length, the sampling frequency and the format of the user data bits.
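The preamble rule and the assembly of a 192-bit channel status word can be sketched as below. The function names are hypothetical, and storing bit 0 of each channel status byte in the least significant bit of a Python byte is a convention chosen for this example.

```python
from __future__ import annotations

FRAMES_PER_BLOCK = 192


def preamble(frame: int, subframe: int) -> str:
    """Preamble letter for a subframe (subframe is 1 or 2)."""
    if subframe == 1:
        # Only the very first subframe of each 192-frame block gets Z.
        return "Z" if frame % FRAMES_PER_BLOCK == 0 else "X"
    if subframe == 2:
        return "Y"
    raise ValueError("subframe must be 1 or 2")


def channel_status_block(c_bits: list[int]) -> bytes:
    """Pack one channel's 192 C bits (frame 0 first) into 24 bytes.

    Bit n of the block goes to byte n // 8, bit position n % 8 (LSB first).
    """
    if len(c_bits) != FRAMES_PER_BLOCK:
        raise ValueError("expected 192 channel status bits")
    out = bytearray(FRAMES_PER_BLOCK // 8)
    for n, bit in enumerate(c_bits):
        out[n // 8] |= (bit & 1) << (n % 8)
    return bytes(out)


bits = [0] * FRAMES_PER_BLOCK
bits[0] = 1
print(channel_status_block(bits)[0], preamble(0, 1), preamble(1, 1))
```

A receiver would collect the C bit of each channel from the Z-marked frame onward and call the packing step once per channel.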

1.2 Component video signal:

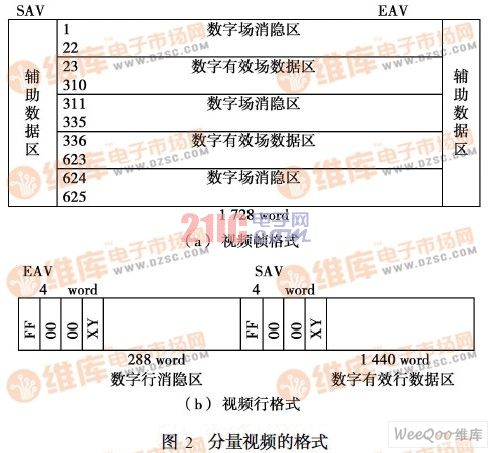

The 625-line standard digital component video signal format is shown in Figure 2a.

A frame contains 625 lines, and each line contains 1728 10-bit data words. Field 1 comprises lines 1 to 312 and field 2 comprises lines 313 to 625. Line 5 of the odd field and line 318 of the even field are reserved for Error Detection and Handling (EDH), and line 6 of the odd field and line 319 of the even field are reserved for the Vertical Interval Switching Point. The remaining 621 lines provide auxiliary data space that can be used to embed audio data.
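The line accounting above can be checked with a few lines of Python. The reserved line numbers are taken from the text for the 625-line system; the helper names are illustrative.

```python
from __future__ import annotations

LINES_PER_FRAME = 625
FIELD1_LAST_LINE = 312
# Reserved lines from the text: EDH in lines 5 and 318,
# vertical interval switching point in lines 6 and 319.
RESERVED_LINES = frozenset({5, 6, 318, 319})


def field_of_line(line: int) -> int:
    """Field 1 = lines 1-312, field 2 = lines 313-625."""
    if not 1 <= line <= LINES_PER_FRAME:
        raise ValueError("line number out of range")
    return 1 if line <= FIELD1_LAST_LINE else 2


def audio_capable_lines() -> list[int]:
    """Lines whose auxiliary data space may carry embedded audio."""
    return [n for n in range(1, LINES_PER_FRAME + 1) if n not in RESERVED_LINES]


print(len(audio_capable_lines()))  # 621
```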

The data format of a component video line is shown in Figure 2b. A video line consists of 1728 words: the first 288 words form the digital line blanking area, and the last 1440 words form the digital active line. The line blanking area begins and ends with a 4-word Timing Reference Signal (TRS), namely EAV (End of Active Video) and SAV (Start of Active Video). Embedded audio data is placed in the line blanking area of each line, between EAV and SAV, and must start immediately after EAV.
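These numbers give a quick blanking budget: 288 blanking words minus the two 4-word TRS sequences leaves 280 words per line for embedded data. The sketch below computes that and estimates the size of audio data packets; the packet-length helper assumes the packet structure of section 1.3 (3 header words, DID, DBN, DC, user data, checksum) and the 3 words per channel sample described in section 1.4. It is an estimate for audio data packets only, not a normative rule.

```python
WORDS_PER_LINE = 1728
ACTIVE_WORDS = 1440
TRS_WORDS = 4                 # length of EAV and of SAV
PACKET_OVERHEAD = 3 + 3 + 1   # header words, DID/DBN/DC, checksum
WORDS_PER_SAMPLE = 3          # one channel sample in an audio data packet


def hanc_capacity() -> int:
    """Words between the end of EAV and the start of SAV in one line."""
    blanking = WORDS_PER_LINE - ACTIVE_WORDS   # 288
    return blanking - 2 * TRS_WORDS            # 280


def audio_data_packet_words(channels: int, samples_per_channel: int) -> int:
    """Estimated length of one audio data packet, including its header."""
    return PACKET_OVERHEAD + WORDS_PER_SAMPLE * channels * samples_per_channel


# Example: four groups of 4 channels, 4 samples per channel in this line.
used = 4 * audio_data_packet_words(4, 4)
print(used, hanc_capacity(), used <= hanc_capacity())
```

Control and extended data packets, when present in the same line, would take additional words from the same 280-word space.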

1.3 Auxiliary data space:

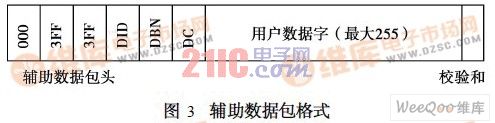

The format of an auxiliary data packet in the component video signal is shown in Figure 3. The first 3 data words form a synchronization header. Because all-0 and all-1 data words are reserved for synchronization in the video signal, the receiver can reliably recognize embedded audio data by this header. The following DID (Data ID) identifies the type of the auxiliary data packet, so the receiver can interpret the user data correctly. DBN (Data Block Number) is a continuity counter: for successive auxiliary data packets with the same DID, DBN is incremented by 1, which lets the receiver detect whether data has been lost. DC (Data Count) gives the number of user data words that follow, and the packet ends with a checksum computed over all words from the DID to the end of the user data. The receiver uses this checksum to check whether the packet contains errors.
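The packet structure can be sketched in Python. Beyond what the text states, this assumes the SMPTE 291M conventions for 10-bit words: the header is 000h, 3FFh, 3FFh; DID, DBN and DC carry an 8-bit value with b8 as even parity over b0-b7 and b9 = NOT b8; and the checksum is the 9-bit sum of b0-b8 over the DID through the last user word, with b9 = NOT b8. The DBN wrap-around (1 to 255, then back to 1) is also an assumption, and all function names are hypothetical.

```python
from __future__ import annotations

ADF = [0x000, 0x3FF, 0x3FF]  # header: one all-0 word, then two all-1 words


def header_word(value: int) -> int:
    """8-bit value with b8 = even parity over b0-b7 and b9 = NOT b8."""
    value &= 0xFF
    b8 = bin(value).count("1") & 1
    return value | (b8 << 8) | ((b8 ^ 1) << 9)


def checksum(words: list[int]) -> int:
    """9-bit sum of b0-b8 over DID..last user word, with b9 = NOT b8."""
    s = sum(w & 0x1FF for w in words) & 0x1FF
    return s | ((((s >> 8) & 1) ^ 1) << 9)


def build_packet(did: int, dbn: int, user_words: list[int]) -> list[int]:
    """Assemble header, DID, DBN, DC, user data words and checksum."""
    if len(user_words) > 255:
        raise ValueError("DC is 8 bits, so at most 255 user data words")
    body = [header_word(did), header_word(dbn), header_word(len(user_words))]
    body += [w & 0x3FF for w in user_words]
    return ADF + body + [checksum(body)]


def packet_ok(packet: list[int]) -> bool:
    """Receiver-side check of header, data count and checksum."""
    if packet[:3] != ADF or len(packet) < 7:
        return False
    body = packet[3:-1]
    return (body[2] & 0xFF) == len(body) - 3 and checksum(body) == packet[-1]


def next_dbn(dbn: int) -> int:
    """Continuity counter, assumed to count 1..255 and wrap back to 1."""
    return 1 if dbn >= 255 else dbn + 1


print([hex(w) for w in build_packet(0xFF, 1, [])])
```

A receiver that sees DBN jump by more than one for the same DID, or a checksum mismatch, knows that data was lost or corrupted.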

1.4 Audio embedding format:

Audio embedding is implemented by packing the AES/EBU digital signal into a defined packet format and inserting it into the video line blanking area. The standard allows from 2 up to 16 channels of audio to be embedded. Three types of audio packets are embedded in the video data: audio control packets, audio data packets and extended data packets. They should be distributed as evenly as possible through the video signal to minimize the buffer resources needed in the system.
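To put "as evenly as possible" in numbers: at 48 kHz and 25 frames per second, each channel needs 48000 / 25 = 1920 samples per frame. Spread over the 621 usable lines from section 1.2, that is about 3.09 samples per line, so each line carries 3 or 4 samples. The sketch below uses integer rounding to decide how many samples each line gets. It assumes audio locked to video and only illustrates even distribution; it is not the normative placement rule of the standard.

```python
from __future__ import annotations

SAMPLE_RATE = 48_000
FRAME_RATE = 25                                  # 625-line system
SAMPLES_PER_FRAME = SAMPLE_RATE // FRAME_RATE    # 1920 per channel


def samples_per_line(available_lines: int = 621,
                     samples_per_frame: int = SAMPLES_PER_FRAME) -> list[int]:
    """Spread samples over lines so that line counts differ by at most one.

    Line i gets floor((i+1)*S/L) - floor(i*S/L) samples, so the running
    total always stays within one sample of the ideal S*i/L.
    """
    return [
        (i + 1) * samples_per_frame // available_lines
        - i * samples_per_frame // available_lines
        for i in range(available_lines)
    ]


counts = samples_per_line()
print(sum(counts), min(counts), max(counts))
```

Keeping every line within one sample of the ideal rate is what keeps the receiver's buffer small: it never has to hold more than about one line's worth of extra samples.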

The audio control packet format is shown in Figure 4a. The audio channels to be embedded are organized into audio groups of 4 channels, and each audio group has its own control packet. The control packet is transmitted in the video line immediately following each vertical interval switching point, that is, once per field. It contains information such as the sampling rate, which audio channels are active, and the audio processing delay. Within the auxiliary data space, the control packet must precede all other audio packets.
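The grouping and the control packet position follow directly from the text. Assuming channels numbered 1 to 16 and the switching lines 6 and 319 given in section 1.2, control packets would appear in lines 7 and 320. The helpers below are illustrative.

```python
from __future__ import annotations

CHANNELS_PER_GROUP = 4
MAX_CHANNELS = 16
SWITCHING_LINES = (6, 319)   # 625-line system, from section 1.2


def group_of_channel(channel: int) -> int:
    """Audio group (1-4) that contains a channel numbered 1-16."""
    if not 1 <= channel <= MAX_CHANNELS:
        raise ValueError("channel must be 1-16")
    return (channel - 1) // CHANNELS_PER_GROUP + 1


def control_packet_lines() -> list[int]:
    """Lines carrying control packets: the line after each switching point."""
    return [line + 1 for line in SWITCHING_LINES]


print([group_of_channel(ch) for ch in (1, 4, 5, 16)], control_packet_lines())
```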

The audio data packet (Figure 4b) carries the actual audio signal. The 20-bit audio sample and the V, U and C bits of each AES/EBU subframe are mapped in a fixed format into 3 consecutive words of the packet. The data from the 4 subframes of the 2 AES/EBU pairs are arranged in order, so one data packet can carry one or more samples of each of up to 4 audio channels.
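A sketch of the 3-word mapping follows. The text only says that the 20-bit sample plus V, U and C occupy 3 words; the exact bit positions below (a block-start flag Z and a 2-bit channel number in the first word, an even-parity bit P over the other 26 bits, and b9 = NOT b8 in every word) follow the layout commonly described for SMPTE 272M and should be treated as an assumption to check against the standard.

```python
from __future__ import annotations


def _with_b9(word9: int) -> int:
    """Add b9 = NOT b8 to a 9-bit value."""
    return word9 | ((((word9 >> 8) & 1) ^ 1) << 9)


def pack_sample(audio20: int, ch: int, z: int, v: int, u: int, c: int) -> list[int]:
    """Map one 20-bit sample plus V, U, C into three 10-bit words."""
    if not 0 <= audio20 < 1 << 20:
        raise ValueError("audio sample must fit in 20 bits")
    if not 0 <= ch <= 3:
        raise ValueError("channel within a group must be 0-3")
    w0 = (z & 1) | (ch << 1) | ((audio20 & 0x3F) << 3)   # audio bits 0-5
    w1 = (audio20 >> 6) & 0x1FF                          # audio bits 6-14
    w2 = (((audio20 >> 15) & 0x1F)                       # audio bits 15-19
          | ((v & 1) << 5) | ((u & 1) << 6) | ((c & 1) << 7))
    # P (b8 of the third word): even parity over the 26 bits above.
    ones = bin(w0).count("1") + bin(w1).count("1") + bin(w2).count("1")
    w2 |= (ones & 1) << 8
    return [_with_b9(w0), _with_b9(w1), _with_b9(w2)]


def unpack_sample(words: list[int]) -> dict[str, int]:
    """Inverse of pack_sample; 'parity_ok' is 1 if the P bit checks."""
    w0, w1, w2 = (w & 0x1FF for w in words)
    ones = bin(w0).count("1") + bin(w1).count("1") + bin(w2).count("1")
    return {
        "audio20": ((w0 >> 3) & 0x3F) | (w1 << 6) | ((w2 & 0x1F) << 15),
        "ch": (w0 >> 1) & 0x3,
        "z": w0 & 1,
        "v": (w2 >> 5) & 1,
        "u": (w2 >> 6) & 1,
        "c": (w2 >> 7) & 1,
        "parity_ok": int(ones % 2 == 0),
    }


print(unpack_sample(pack_sample(0xABCDE, 2, 1, 1, 0, 1)))
```

Setting b9 to the inverse of b8 guarantees that no packed word can equal 000h or 3FFh, which keeps those values free for synchronization.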

When the audio sample word length exceeds 20 bits, the 4 auxiliary bits of each subframe are packed into the extended data packet (Figure 4c): the 8 auxiliary bits of each pair of subframes are combined into one word of the extended data packet.
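Because the 4 auxiliary bits are the least significant bits of a 24-bit sample (section 1.1), a receiver can rebuild the full word from the 20-bit value in the audio data packet and the matching auxiliary nibble. In the sketch below, placing the first subframe's bits in the low nibble is an assumption, and the handling of the remaining bits (b8 and b9) of the 10-bit extended-packet word is left out.

```python
from __future__ import annotations


def pack_aux_pair(aux_first: int, aux_second: int) -> int:
    """Combine the 4 aux bits of two subframes into one 8-bit value.

    Assumption: the first subframe of the pair goes in the low nibble.
    """
    if not (0 <= aux_first < 16 and 0 <= aux_second < 16):
        raise ValueError("aux values must fit in 4 bits")
    return aux_first | (aux_second << 4)


def unpack_aux_pair(value8: int) -> tuple[int, int]:
    """Split an 8-bit extended-packet value back into two aux nibbles."""
    return value8 & 0xF, (value8 >> 4) & 0xF


def rebuild_24bit(audio20: int, aux4: int) -> int:
    """Aux bits are the 4 LSBs of a 24-bit sample, the 20-bit part the MSBs."""
    return (audio20 << 4) | (aux4 & 0xF)


first, second = unpack_aux_pair(pack_aux_pair(0x5, 0xA))
print(hex(rebuild_24bit(0xABCDE, first)))  # 0xabcde5
```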

Audio data packets and extended data packets must be placed in each video line immediately after the EAV. An extended data packet must be placed in the same auxiliary data space as its associated audio data packet, directly after that audio data packet.
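The ordering rules in this section can be sketched as a checker: control packets come before any other audio packet, and each extended data packet directly follows the audio data packet of the same group. It runs over the sequence of packets that follows EAV in one line. The (kind, group) representation is hypothetical and only covers the rules stated here.

```python
from __future__ import annotations

KINDS = {"control", "data", "extended"}


def check_hanc_order(packets: list[tuple[str, int]]) -> list[str]:
    """Return rule violations for the packets that follow EAV in one line.

    packets: (kind, audio group) pairs in transmission order; kind is
    "control", "data" or "extended" (a hypothetical representation).
    """
    problems: list[str] = []
    seen_audio = False
    for i, (kind, group) in enumerate(packets):
        if kind not in KINDS:
            raise ValueError(f"unknown packet kind {kind!r}")
        if kind == "control":
            # A control packet must precede every data or extended packet.
            if seen_audio:
                problems.append(f"control packet for group {group} is not first")
            continue
        seen_audio = True
        if kind == "extended" and (i == 0 or packets[i - 1] != ("data", group)):
            problems.append(
                f"extended packet for group {group} does not follow its data packet")
    return problems


print(check_hanc_order([("control", 1), ("data", 1), ("extended", 1)]))
```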

2 Conclusion:

Applying audio embedding in TV program production and broadcasting achieves lossless transmission of audio and video, improves the transmission quality of the audio signal, solves the problem of audio and video falling out of sync during transmission, and greatly reduces the cost of TV signal transmission, thereby improving the quality of TV programs.
